Re:Draw - Context Aware Translation as a Controllable Method for Artistic Production

In Proceedings of the 33rd International Joint Conference on Artificial Intelligence

TU Wien

CNR-ISTI

CNR-ISTI

TU Wien

Abstract

We introduce context-aware translation, a novel method that combines the benefits of inpainting and image-to-image translation. Unlike existing methods, it simultaneously respects both the original input and contextual relevance. In doing so, our method opens new avenues for the controllable use of AI in artistic creation, from animation to digital art.

As a use case, we apply our method to redraw the eyes of any hand-drawn animated character according to arbitrary design specifications. Eyes serve as a focal point that captures viewer attention and conveys a range of emotions; however, the labor-intensive nature of traditional animation often leads to compromises in the complexity and consistency of eye design. Furthermore, we remove the need for production data for training and introduce a new character recognition method that surpasses existing work by not requiring fine-tuning to specific productions. This use case could help maintain consistency throughout production and unlock bolder, more detailed design choices without increasing production costs. A user study shows that context-aware translation is preferred over existing work 95.16% of the time.

BibTeX

Please do not forget to cite our work:

@inproceedings{ijcai2024p842,
  title     = {Re:Draw - Context Aware Translation as a Controllable Method for Artistic Production},
  author    = {Cardoso, João Libório and Banterle, Francesco and Cignoni, Paolo and Wimmer, Michael},
  booktitle = {Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, {IJCAI-24}},
  url       = {https://doi.org/10.24963/ijcai.2024/842}
}

Explainer Video

Stress Test

Additional Media

Our method can be used to increase the amount of detail in characters' eyes. Note that more detail does not necessarily translate into better or more appealing artwork: simplified designs are sometimes a deliberate stylistic choice, but they may also result from technical constraints. Our method makes higher detail an option where desired, without increasing production time.

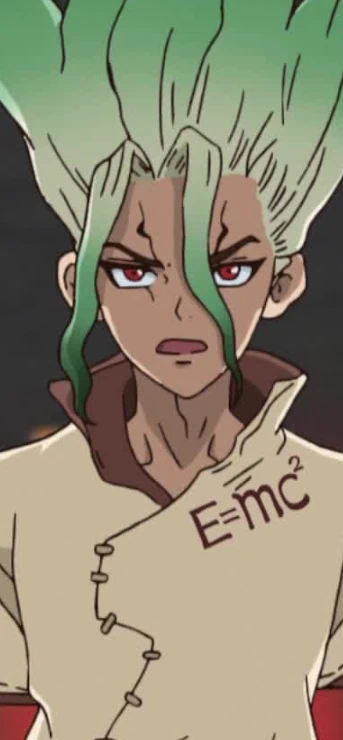

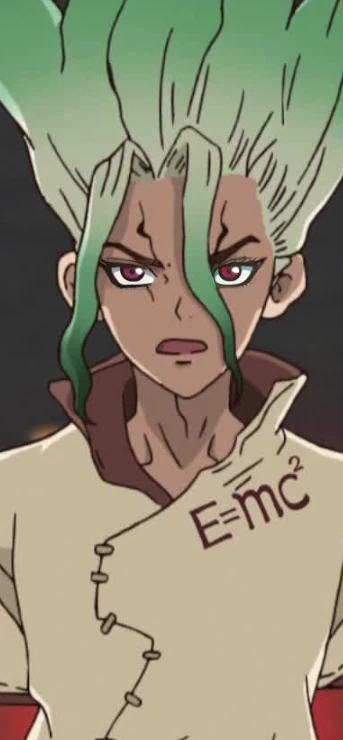

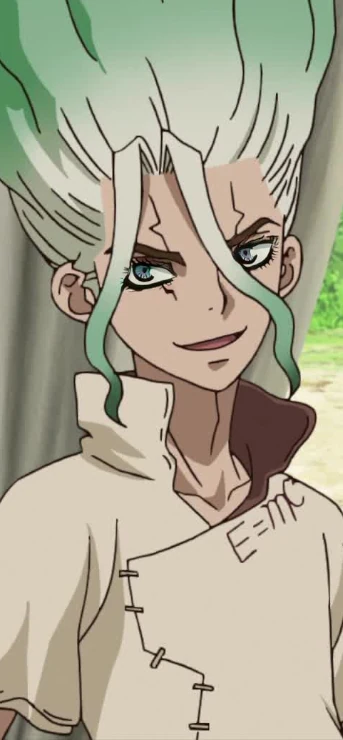

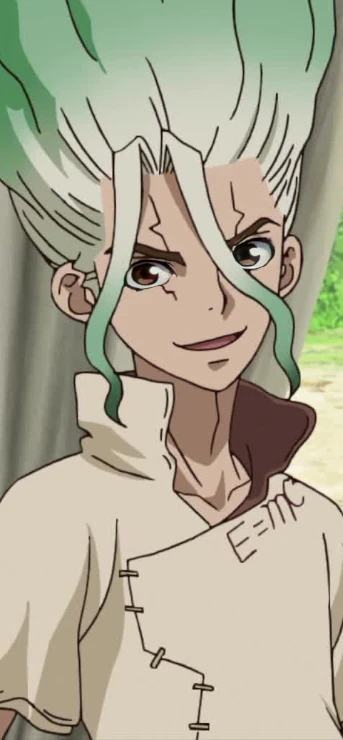

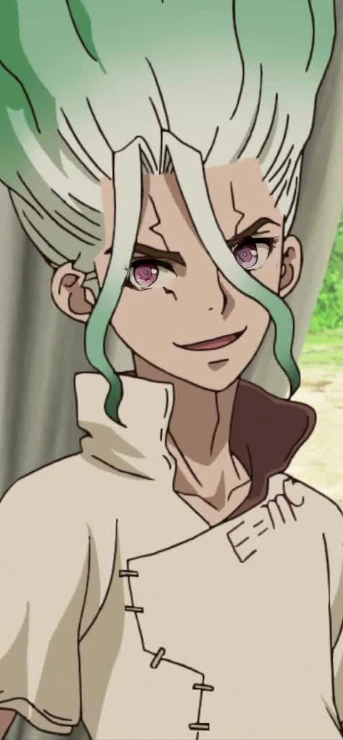

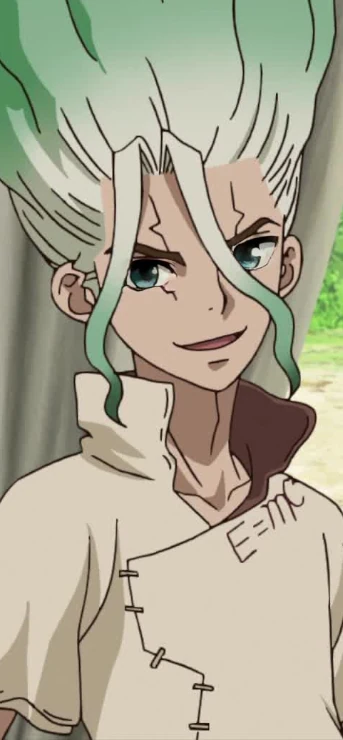

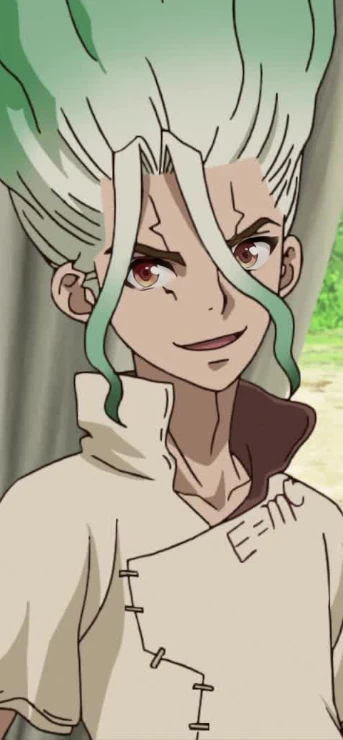

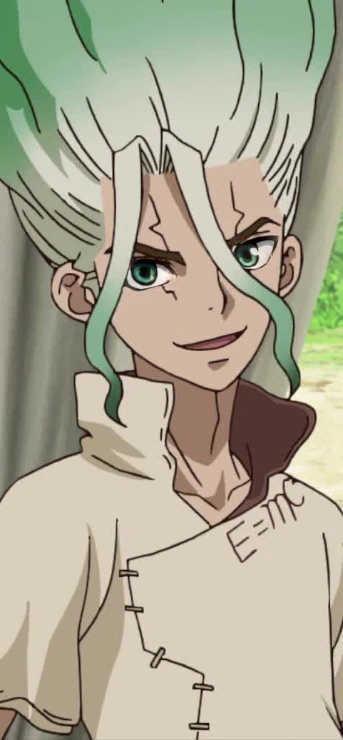

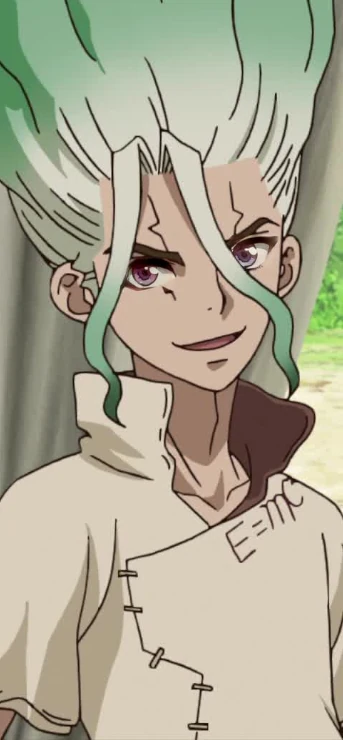

We present examples from a diverse set of anime series, including Kobayashi's Dragon Maid, The Ancient Magus Bride, Idolmaster: Xenoglossia, Dr. Stone, Eden of the East, Darling in the Franxx and Wotakoi.

As a side effect of how our networks are trained, our method can also apply entirely different designs to characters. Although this is not the focus of our work, it demonstrates the generalizability of our method and the robustness of the learned models.

In these examples featuring Kobayashi's Dragon Maid, The Ancient Magus Bride, Angels of Death, Re:Zero and Dr. Stone, we demonstrate that our method is robust enough to handle characters in a variety of challenging scenarios, including atypical facial proportions, high-resolution imagery, oblique camera angles, head tilts, irregular lighting conditions, and occlusions caused by hair.

Acknowledgements

We would especially like to thank Jarret Martin for teaching us about the needs of the animation pipeline, for his continuous feedback throughout our research, and for his help running the industry survey. We would also like to thank Tonari Animation and OtakuVS for providing production data and other material from their works Otachan and Second Self, which we used to illustrate the research whenever possible.

Finally, we would like to thank some of the first author's students for their contributions during the early stages of this project, in particular Yanic Thurner, Felix Kugler, and especially Dominik Hanko, who worked more closely on this topic.

All copyrighted content belongs to its original owners. Characters, music, and all other forms of creative content are owned by their original creators, and we make no claim to them. This content was used in compliance with Fair Use law for the purpose of illustrating non-profit scientific research.